Even though I was hoping everyone would slip into a three-day coma after the I2MM last week, it turns out the world keeps churning and we still have a lot of things to keep plugging away at. No rest for the weary!

Half of our prayers were answered this week with the arrival of a new engineer at IU. John Graham comes to us by way of UKLight in Great Britain. Fortunately, he's very well versed in SONET, specifically Ciena equipment. We're very fortunate that John decided to join up and make the move to Bloomington.

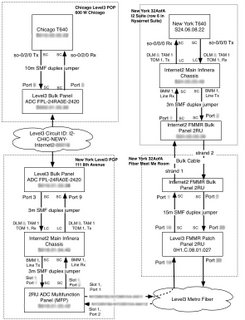

The Ciena buildout is just starting to get into action with our first few boxes shipped and installed over the past few weeks. John's arrival is perfect timing and he'll be running the Ciena show for the next several months. That frees up some cycles amongst the rest of us to keep our attention on the IP network transition and the Layer1 issues. John has already been instrumental in pointing out that the CoreDirectors are quite complicated beasts to cable up and that we should pre-wire the front panels to a separate fiber tray. It's that kind of real-world experience that will make the Ciena network a success.

Lots of meetings coming up. Level3 is bringing a whole slew of folks to Indianapolis on Wednesday to review our final operating procedures. We'll get to meet our dedicated support engineer that will be answering the phone when we call during business hours for Level3 technical assistance. There's been a lot of informality so far in handling these first few wave turnups, so we'll finalize the procedures that will enable us to move even faster.

Thursday is a meeting to discuss implementation of the layer2 services. There's some good documentation floating around that we'll look over and discuss before presenting it to the community for input. I'm really interested to see what sorts of things people have planned for the Ciena network, as I'm sure the rest of the team is.

Next Monday is a meeting at Ciena to talk about control plane management and implementation. John Graham will be attending in person from IU, and Matt Davy and I will be joining via phone bridge.

The schedules are getting more focused as more information rolls in. Right now, it looks like Philadelphia and Pittsburgh installs will be the first or second week of January, though there are still a few niggling details that I expect to be sorted out.

Right now, we're hoping that MAGPI's 10G wave to New York from Philadelphia will be ready sometime next week. The suite sounds on track for delivery tomorrow and we have to get it accepted and run some fibers first. Hope to have that all taken care of by Friday.

It looks like the 710 North Lakeshore facility in Chicago is having a few issues with the overhead ladder rack not being ready in time, so the last I've heard, the availability of power has moved to next week. That's our top priority now since it's needed to move the Indiana Gigapop off the Indianapolis router. Once we free up Indy, we can ship it to Washington and the next phase of transition starts up.

That's all for now. Lots going on. Should have more to report shortly.